ChatGPT isn’t ready for a lot of straightforward human tasks: math, fact-checking and, until recently, knowing about current events. HR is no exception. Inspired by my colleague at CFO Dive, I asked ChatGPT a few questions about the demands of HR.

AI is a hot topic in HR, mainly in talent acquisition and, increasingly, in compliance conversations. ChatGPT said it is up to the challenge, from compensation and benefits work to L&D, but it explicitly told me that it does not want to take HR’s job. Instead, it wants to complement the human side of human resources.

Editor’s note: The following is a Q&A between ChatGPT and HR Dive’s Caroline Colvin. Answers have been edited for length and clarity.

HR DIVE: How can AI help with employee engagement and well-being?

CHATGPT: AI can play a significant role in enhancing employee well-being and engagement in several ways:

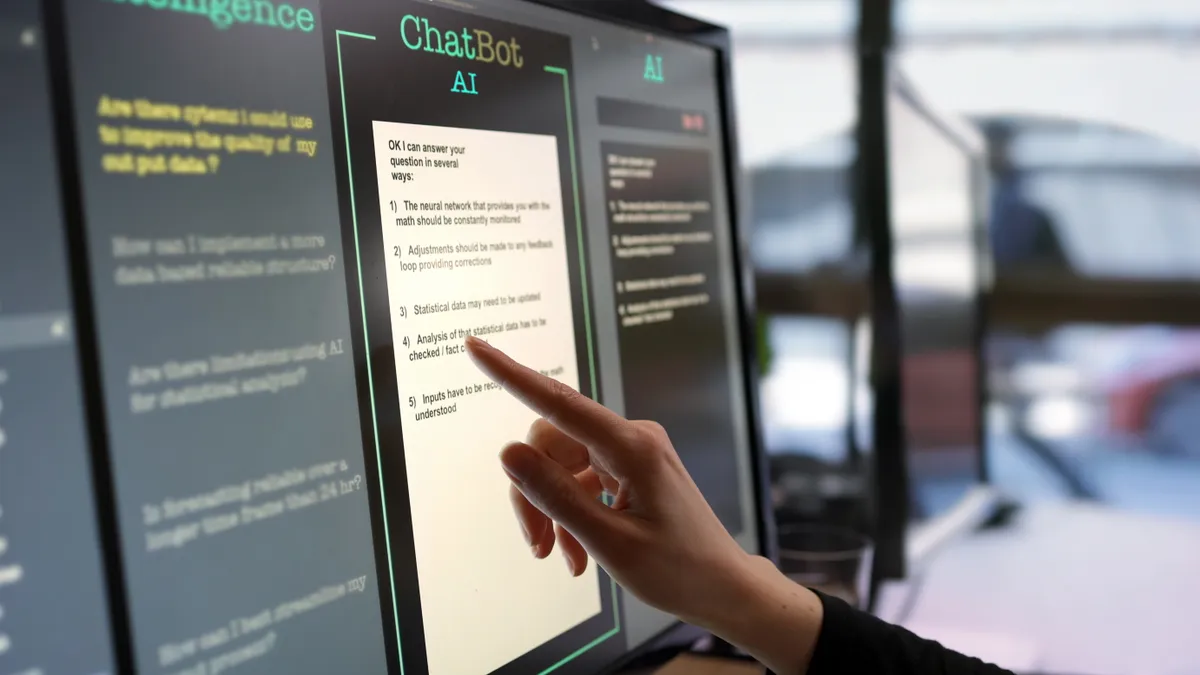

AI-powered tools can collect and analyze data on employees’ well-being, such as stress levels, work patterns and physical activity. With this information, AI algorithms can provide personalized recommendations and interventions to promote better work-life balance, stress management, and overall well-being.

AI chatbots or virtual assistants can provide confidential and easily accessible platforms for employees to seek mental health support. These AI systems can offer resources, provide coping strategies and even conduct basic assessments to identify individuals who may need additional assistance.

[They also] can provide real-time feedback and recognition to employees, fostering a culture of continuous improvement and appreciation. For example, AI can analyze performance data and offer immediate feedback to employees, allowing them to make adjustments and improve their work.


AI algorithms can analyze various employee data points, such as performance, engagement and sentiment, to identify patterns and predict the likelihood of an employee leaving the organization. By identifying potential retention risks, HR can take proactive measures to address issues and improve employee satisfaction.

AI can personalize learning and development experiences by recommending training programs and resources based on individual employee skills, interests and career goals. AI-powered systems can also deliver adaptive learning experiences that adjust content and pacing to optimize employee engagement and knowledge retention.

AI-powered tools, such as virtual meeting assistants and language translation services, can enhance remote collaboration and communication among employees. These tools can improve accessibility, efficiency and inclusivity in virtual work environments.

AI algorithms can analyze historical data, employee skills and workload patterns to optimize workforce planning. This can help HR identify skill gaps, allocate resources effectively, and ensure that employees are appropriately assigned to tasks that align with their abilities and interests.

It's important to note that while AI can provide valuable support, it should be used as a complement to human interaction and support, rather than a replacement. HR professionals should work alongside AI systems to ensure that the technology is ethically deployed and that employees feel supported and valued throughout the process.

HR DIVE: Just to clarify, you don't think ChatGPT can replace human HR managers?

CHATGPT: While AI-powered systems like ChatGPT can provide valuable support and assistance in various HR tasks, they are not intended to replace human HR managers entirely. HR managers play a crucial role in organizations by providing empathy, critical thinking and strategic decision-making that AI systems alone may not be able to replicate. Human HR managers bring the depth of understanding, contextual knowledge and emotional intelligence needed for complex people-related issues.

AI systems like ChatGPT can assist HR managers by automating certain routine tasks, providing data-driven insights, and offering personalized support to employees. They can help streamline processes, enhance efficiency, and augment decision-making. However, there are still important aspects of HR management that require human involvement, such as interpreting and applying employment laws, navigating sensitive employee issues, fostering relationships and exercising judgment in ambiguous situations.

The optimal approach is to leverage AI technology as a tool that complements and enhances the capabilities of HR managers. By combining the strengths of AI and human expertise, organizations can benefit from improved efficiency, better data-driven insights, and more personalized employee experiences while maintaining the human touch and strategic guidance that HR managers provide.

HR DIVE: You said it's important to make sure that this technology is “ethically deployed.” What are some HR compliance challenges that come with using AI at work?

CHATGPT: The use of AI in HR introduces several compliance challenges that organizations need to address to ensure ethical and responsible deployment:

1. Data Privacy and Security: AI systems in HR rely on vast amounts of employee data, including personal information, performance metrics and health-related data. Organizations must ensure that data collection, storage, and usage comply with applicable data privacy regulations, such as the General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA). Safeguards must be in place to protect sensitive employee data from unauthorized access or breaches.

2. Bias and Discrimination: AI algorithms can inadvertently perpetuate biases present in historical data, leading to unfair or discriminatory outcomes. HR teams need to carefully assess and monitor AI systems to detect and reduce bias and to ensure employees are treated fairly. This involves ongoing evaluation of the training data, testing for disparate impact and implementing measures to mitigate bias throughout the AI system’s life cycle.

3. Transparency and Explainability: AI systems often operate as “black boxes,” making it challenging to understand how they arrive at decisions or recommendations. However, employees have the right to understand the basis of decisions that impact their employment. Organizations must strive for transparency and develop methods to explain AI-generated outcomes to employees in a clear and understandable manner.

4. Compliance with Employment Laws: AI systems must align with employment laws and regulations. This includes ensuring compliance with anti-discrimination laws, fair hiring practices and regulations regarding employee monitoring and surveillance. Organizations need to carefully assess how AI is used in recruitment, performance management, and other HR processes to avoid legal pitfalls and ensure fairness.

5. Informed Consent and Employee Rights: Organizations should obtain informed consent from employees regarding the collection and use of their data for AI purposes. Employees should be informed about how AI systems are being used, the potential impact on their employment, and their rights related to data privacy, transparency, and decision-making.

6. Ethical Use of AI: HR teams must establish guidelines and policies for the ethical use of AI in the workplace. This involves defining clear boundaries for AI applications, ensuring algorithms are used responsibly, and addressing potential ethical dilemmas, such as AI-driven employee monitoring or intrusive data collection.

Addressing these compliance challenges requires a collaborative effort between HR, legal and IT teams. Organizations should conduct regular audits, implement governance frameworks, and involve experts to ensure that AI systems are deployed ethically and in accordance with legal and regulatory requirements.

HR DIVE: Speaking of bias and discrimination, many have warned against using AI in hiring because of potential racial, class and gender biases in decision-making. Do you think there’s an AI-friendly solution here?

CHATGPT: Addressing biases in AI-based hiring processes is indeed crucial to ensure fair and equitable outcomes. While no solution is entirely foolproof, there are several approaches organizations can take to mitigate bias in AI-based hiring:

1. Diverse and Representative Training Data: One of the primary causes of bias in AI systems is biased training data. Organizations should strive to use diverse and representative data when training AI models. This can help minimize the risk of perpetuating biases present in the data.

2. Bias Testing and Evaluation: Regular testing and evaluation of AI models for bias are essential. This involves analyzing the impact of AI algorithms on different demographic groups to identify and address any disparate impact. Testing methodologies, such as fairness metrics and audit procedures, can help assess and measure bias in AI models.

3. Bias Mitigation Techniques: Bias mitigation techniques designed specifically for AI systems can help reduce discriminatory outcomes. They can be applied before training (pre-processing the data), during training (in-processing) or after training (post-processing the algorithm’s outputs) to promote fairness and reduce bias.

4. Transparency and Explainability: Organizations should strive for transparency and explainability in AI-based hiring systems. Candidates should be informed about the use of AI in the selection process and provided with clear explanations of how decisions are made. Transparent communication can help build trust and allow individuals to better understand and challenge any potential biases.

5. Ongoing Monitoring and Auditing: Regular monitoring and auditing of AI systems are necessary to identify and rectify biases that may emerge over time. This includes evaluating the performance and impact of AI models and making necessary adjustments to ensure fairness and equity.

6. Human Oversight and Judgment: While AI can assist in the hiring process, human oversight and judgment remain crucial. HR professionals should actively review and validate AI-generated recommendations, ensuring they align with organizational values and legal requirements. Human decision-making can provide additional context, consider individual circumstances, and account for factors that AI may not fully capture.

It’s important to note that the responsibility for fair and unbiased hiring practices ultimately lies with the organization. While AI can be a powerful tool, it should be used as an aid to support human decision-making rather than as a sole determinant. Organizations must remain vigilant, continuously assess AI systems and adapt their approaches to minimize bias and discrimination in the hiring process.